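Anolis OS (龙蜥) is an open-source operating system from Alibaba Cloud. Although it is not on the Anke (安可) catalog, it claims 100% compatibility with the CentOS 8 software ecosystem. With CentOS no longer maintained and demand for domestically developed platforms growing, that makes it an attractive replacement. The compatibility lets enterprises migrate without changing their existing application architecture, which meets localization requirements while preserving business continuity.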

Software versions used in this environment

- Server CPU: Hygon 3350 / Zhaoxin KaiXian KX-5000 / Intel

- Operating system: Anolis OS 8.6 / Anolis OS 8.9

- Containerd: 1.7.13

- Kubernetes: v1.30.12

- KubeSphere: v4.1.3

- KubeKey: v3.1.9

- Docker: 24.0.9

- Docker Compose: v2.26.1

- Harbor: v2.10.1

- Prometheus: v2.51.2

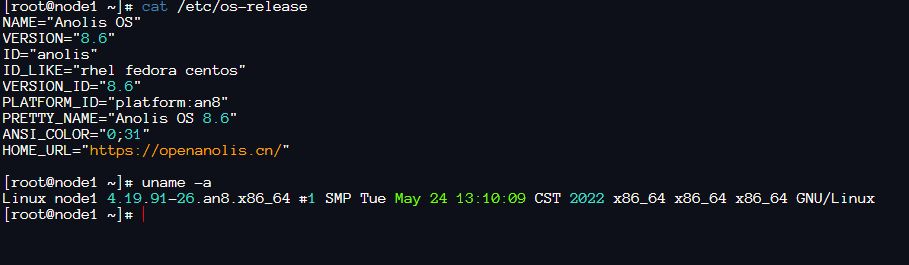

Basic server information

[root@node1 ~]# cat /etc/os-release

NAME="Anolis OS"

VERSION="8.6"

ID="anolis"

ID_LIKE="rhel fedora centos"

VERSION_ID="8.6"

PLATFORM_ID="platform:an8"

PRETTY_NAME="Anolis OS 8.6"

ANSI_COLOR="0;31"

HOME_URL="https://openanolis.cn/"

[root@node1 ~]# uname -a

Linux node1 4.19.91-26.an8.x86_64 #1 SMP Tue May 24 13:10:09 CST 2022 x86_64 x86_64 x86_64 GNU/Linux

[root@node1 ~]#
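When the same check has to be repeated on several nodes, it can be easier to read the distribution from the file than to compare the output by eye. The following is a minimal sketch: `os_id_version` is a hypothetical helper name, not part of any tool used in this article, and it assumes the file follows the usual os-release `KEY="value"` layout shown above.

```shell
# Print "<ID> <VERSION_ID>" from an os-release style file.
# os_id_version is a hypothetical helper; the default path is the
# standard /etc/os-release location.
# The function body runs in a subshell so the sourced variables
# (NAME, ID, VERSION_ID, ...) do not leak into the calling shell.
os_id_version() (
  . "${1:-/etc/os-release}"
  printf '%s %s\n' "$ID" "$VERSION_ID"
)
```

On the node shown above, `os_id_version` should print `anolis 8.6`, matching the ID and VERSION_ID fields in the listing.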

1. Notes

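While working with Kubernetes and KubeSphere, the author has adapted them to the following chips and operating systems:

- CPU chips:
  - Kunpeng
  - Phytium (飞腾)
  - Hygon
  - Zhaoxin
  - International chips: Intel, AMD, etc.
- Operating systems:
  - Kylin V10 (银河麒麟)
  - Kylin Defense Edition (麒麟国防版)
  - KylinSec (麒麟信安)
  - NeoKylin V7 (中标麒麟)
  - UnionTech UOS
  - Huawei openEuler, China Mobile BigCloud (移动大云)
  - Alibaba Anolis OS
  - Tencent TencentOS
  - International operating systems: CentOS, Ubuntu, Debian, etc.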

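This article is an original work by [编码如写诗-天行1st]. For any questions, add the author on WeChat [sd_zdhr] to get help.

About me:

- Mainly a backend developer who also works on frontend and operations as a full-stack engineer; enthusiastic about Golang, Docker, Kubernetes, and KubeSphere.

- Evangelist for Kubernetes and KubeSphere on Xinchuang (信创) servers, and for offline KubeSphere deployment.

- WeChat official account: 编码如写诗; author: 天行1st; WeChat: sd_zdhr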

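2. Prerequisites

Prepare at least three hosts, following the example below. A dedicated worker node (node1) can be omitted, in which case the master nodes serve as both control-plane and worker nodes.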

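| Hostname | IP | Architecture | OS | Purpose |
|---|---|---|---|---|
| harbor | 192.168.3.249 | x86_64 | Ubuntu 24.04 | Internet-connected host used to build the offline package; also the image registry node |
| master1 | 192.168.85.138 | x86_64 | Anolis OS 8.6 | Offline environment control-plane node 1 |
| master2 | 192.168.85.231 | x86_64 | Anolis OS 8.6 | Offline environment control-plane node 2 |
| master3 | 192.168.85.232 | x86_64 | Anolis OS 8.6 | Offline environment control-plane node 3 |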

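3. Build the offline package

Run the steps in this section on the internet-connected host (harbor in the table above).

3.1 Download KubeKey (kk)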

curl -sSL https://get-kk.kubesphere.io | sh -

3.2 Create the manifest file

export KKZONE=cn

./kk create manifest --with-kubernetes v1.30.12 --with-registry

3.3 Edit the manifest file

vi manifest-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  arches:
  - amd64
  operatingSystems: []
  kubernetesDistributions:
  - type: kubernetes
    version: v1.30.12
  components:
    helm:
      version: v3.14.3
    cni:
      version: v1.2.0
    etcd:
      version: v3.5.13
    containerRuntimes:
    - type: docker
      version: 24.0.9
    - type: containerd
      version: 1.7.13
    calicoctl:
      version: v3.27.4
    crictl:
      version: v1.29.0
    docker-registry:
      version: "2"
    harbor:
      version: v2.10.1
    docker-compose:
      version: v2.26.1
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.9
  # control-plane image tags must match the Kubernetes version above
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.30.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.30.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.30.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.30.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.9.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.22.20
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.27.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.27.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.27.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.27.4
  # ks
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-extensions-museum:v1.1.5
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-controller-manager:v4.1.3
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-apiserver:v4.1.3
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/ks-console:v4.1.3
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/kubectl:v1.27.16
  # whizard-telemetry
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/whizard-telemetry-apiserver:v1.2.2
  # whizard-monitoring
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubespheredev/kube-webhook-certgen:v20221220-controller-v1.5.1-58-g787ea74b6
  - swr.cn-southwest-2.myhuaweicloud.com/ks/prometheus/node-exporter:v1.8.1
  - swr.cn-southwest-2.myhuaweicloud.com/ks/brancz/kube-rbac-proxy:v0.18.0
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/kube-state-metrics:v2.12.0
  - swr.cn-southwest-2.myhuaweicloud.com/ks/prometheus-operator/prometheus-operator:v0.75.1
  - swr.cn-southwest-2.myhuaweicloud.com/ks/prometheus-operator/prometheus-config-reloader:v0.75.1
  - swr.cn-southwest-2.myhuaweicloud.com/ks/prometheus/prometheus:v2.51.2
  - swr.cn-southwest-2.myhuaweicloud.com/ks/kubesphere/kubectl:v1.27.12
  registry:
    auths: {}
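The cluster is installed at the version named under `kubernetesDistributions`, so the four `kube-*` control-plane images in the manifest are expected to carry the same tag. A quick check before exporting can catch a copy-paste slip early. The sketch below is one way to do that: `mismatched_k8s_images` is a hypothetical helper, and it inspects only those four images.

```shell
# Print control-plane images whose tag differs from the expected version.
# Usage: mismatched_k8s_images MANIFEST_FILE EXPECTED_VERSION
# mismatched_k8s_images is a hypothetical helper; it looks only at the
# kube-apiserver, kube-controller-manager, kube-scheduler and kube-proxy
# entries and ignores every other image in the manifest.
mismatched_k8s_images() {
  awk -v want="$2" '
    /\/kube-(apiserver|controller-manager|scheduler|proxy):/ {
      image = $NF                      # last field is the image reference
      n = split(image, parts, ":")     # the tag is the part after the last colon
      if (parts[n] != want) print image
    }' "$1"
}
```

Running `mismatched_k8s_images manifest-sample.yaml v1.30.12` prints nothing when all four tags match, and prints the offending image references otherwise.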

3.4 Export the offline artifact

export KKZONE=cn

./kk artifact export -m manifest-sample.yaml -o artifact-k8s-13012-ks413-monit.tar.gz

3.5 Download the KubeSphere Core Helm chart

Install Helm:

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

Download the KubeSphere Core Helm chart:

VERSION=1.1.3 # chart version; the install command in section 6 must reference the same ks-core .tgz file

helm fetch https://charts.kubesphere.io/main/ks-core-${VERSION}.tgz

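4. Offline deployment preparation

4.1 Copy the installation packages to the offline environment

Copy the downloaded KubeKey binary, the artifact, the Helm chart, and any other media to the master node.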

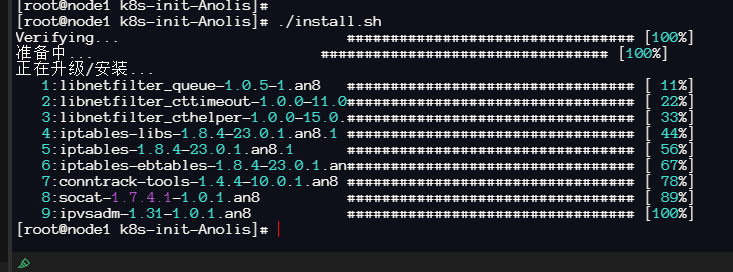
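Large archives occasionally get truncated or corrupted when carried into an isolated network, so it may be worth recording checksums before the copy and verifying them afterwards. This is a minimal sketch, assuming `sha256sum` from coreutils is available on both hosts. The two function names and the `SHA256SUMS` file name are illustrative choices, not part of KubeKey.

```shell
# Run on the connected host, in the directory holding the files to transfer.
# Writes one checksum line per file into SHA256SUMS.
write_checksums() {
  sha256sum "$@" > SHA256SUMS
}

# Run on the offline host after copying the files together with SHA256SUMS.
# Returns non-zero if any file is missing or its checksum differs.
verify_checksums() {
  sha256sum -c SHA256SUMS
}
```

For example, run `write_checksums kk artifact-k8s-13012-ks413-monit.tar.gz ks-core-*.tgz` before the copy and `verify_checksums` on the master node afterwards.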

4.2 Install the Kubernetes dependency packages

Run on all nodes: upload k8s-init-Anolis.tar.gz, extract it, and run install.sh.
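With several nodes, repeating the upload and install by hand gets tedious. The sketch below only prints the commands for review rather than running them. It rests on assumptions not stated in this article: root SSH access, `/root` as the target directory, and `install.sh` sitting at the top level of the archive. Adjust the paths if the bundle unpacks into a subdirectory. `print_dep_install_cmds` is a hypothetical helper name.

```shell
# Print (do not execute) the copy and install commands for each node IP.
# Assumptions: root SSH access, /root as the upload directory, and
# install.sh located at the top level of the extracted archive.
print_dep_install_cmds() {
  bundle="k8s-init-Anolis.tar.gz"
  for ip in "$@"; do
    printf 'scp %s root@%s:/root/\n' "$bundle" "$ip"
    printf "ssh root@%s 'cd /root && tar -xzf %s && bash install.sh'\n" "$ip" "$bundle"
  done
}
```

Calling `print_dep_install_cmds 192.168.85.138 192.168.85.231 192.168.85.232` lists two commands per node. Once they look right, the output can be piped to `sh`.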

4.3 Edit the configuration file

The main changes are the node entries and the Harbor settings.

vi config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: harbor, address: 192.168.3.249, internalAddress: 192.168.3.249, user: root, password: "123456"}
  - {name: master1, address: 192.168.85.138, internalAddress: 192.168.85.138, user: root, password: "123456"}
  - {name: master2, address: 192.168.85.231, internalAddress: 192.168.85.231, user: root, password: "123456"}
  - {name: master3, address: 192.168.85.232, internalAddress: 192.168.85.232, user: root, password: "123456"}
  roleGroups:
    etcd:
    - master1
    - master2
    - master3
    control-plane:
    - master1
    - master2
    - master3
    worker:
    - master1
    - master2
    - master3
    # To let kk deploy the image registry automatically, set this host group
    # (deploying the registry separately from the cluster is recommended, to reduce mutual impact).
    # If Harbor is needed and containerManager is containerd, deploy Harbor on its own node,
    # because the Harbor deployment depends on Docker.
    registry:
    - harbor
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.30.12
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: flannel # the manifest in 3.3 lists only Calico images; the chosen plugin's images must be in the artifact
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    type: harbor
    registryMirrors: []
    insecureRegistries: []
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    auths: # if docker add by `docker login`, if containerd append to `/etc/containerd/config.toml`
      "dockerhub.kubekey.local":
        username: "admin"
        password: Harbor@123 # can be customized here (new in kk 3.1.8)
        skipTLSVerify: true # Allow contacting registries over HTTPS with failed TLS verification.
        plainHTTP: false # Allow contacting registries over HTTP.
        certsPath: "/etc/docker/certs.d/dockerhub.kubekey.local"
  addons: []
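Since the host list in this file drives everything kk does, it can be handy to pull the names and addresses back out of it, for instance to loop over the nodes for a reachability check before installing. The following is a rough sketch: `list_hosts` is a hypothetical helper that understands only the one-line `{name: ..., address: ..., ...}` form used above. It is not a general YAML parser.

```shell
# Print "name address" for every inline host entry in a KubeKey config file.
# list_hosts is a hypothetical helper; it matches only lines of the form
#   - {name: NAME, address: IP, ...}
# and silently skips anything else (role groups, comments, other keys).
list_hosts() {
  sed -n 's/^[[:space:]]*-[[:space:]]*{name:[[:space:]]*\([^,]*\),[[:space:]]*address:[[:space:]]*\([^,]*\),.*$/\1 \2/p' "$1"
}
```

A typical use would be `list_hosts config-sample.yaml | while read -r name ip; do ping -c 1 "$ip" >/dev/null && echo "$name reachable"; done`.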

4.4 Create the image registry

./kk init registry -f config-sample.yaml -a artifact-k8s-13012-ks413-monit.tar.gz

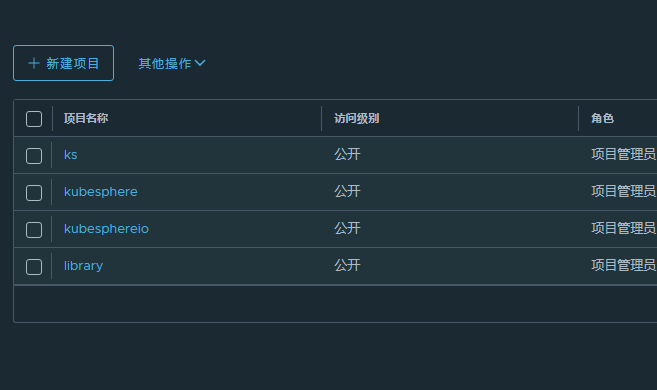

4.5 Create the Harbor projects

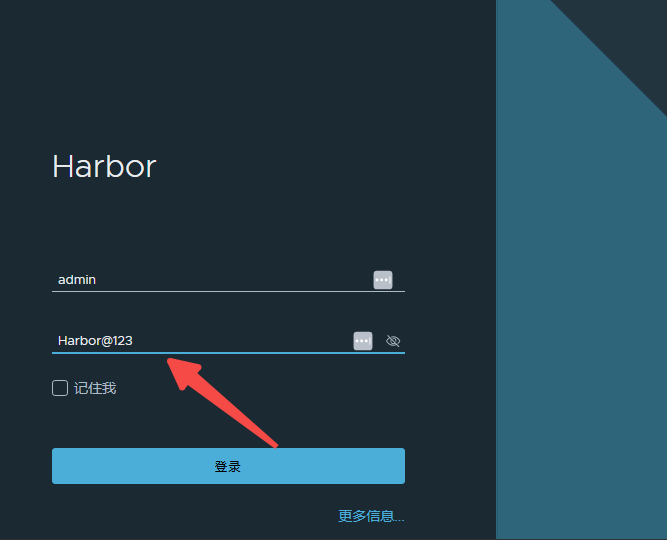

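Notes:

Harbor projects are subject to role-based access control (RBAC): only users with the appropriate role can perform certain operations. Images cannot be pushed to Harbor until the target project has been created. Harbor has two types of projects:

- Public: any user can pull images from the project.

- Private: only users who are members of the project can pull images.

Harbor administrator account: admin; password: Harbor@123. The password follows the corresponding password field in the configuration file.

The Harbor installation files are in the `/opt/harbor` directory, where Harbor can be operated and maintained.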

vi create_project_harbor.sh

#!/usr/bin/env bash

url="https://dockerhub.kubekey.local" # or change this to the actual registry address
user="admin"
passwd="Harbor@123"

harbor_projects=(
  ks
  kubesphere
  kubesphereio
)

for project in "${harbor_projects[@]}"; do
  echo "creating $project"
  curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k # note the trailing -k: it skips TLS certificate verification
done

Create the Harbor projects:

chmod +x create_project_harbor.sh

./create_project_harbor.sh
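The script above prints whatever Harbor returns, which makes a rerun look noisy because existing projects produce a conflict response. A variant that inspects the HTTP status can make the outcome easier to read. This is a sketch, not the author's script: both function names are hypothetical. It relies on Harbor's v2.0 API answering `201` for a newly created project and `409` when the name is already taken.

```shell
# Map the HTTP status of POST /api/v2.0/projects to a short message.
describe_project_status() {
  case "$1" in
    201) echo "created" ;;
    409) echo "already exists" ;;
    401|403) echo "not authorized" ;;
    *) echo "unexpected status $1" ;;
  esac
}

# Create one project and report the outcome.
# Usage: create_project URL USER PASSWORD PROJECT
# -k skips TLS verification, as in create_project_harbor.sh.
create_project() {
  url="$1"; user="$2"; passwd="$3"; project="$4"
  code=$(curl -k -s -o /dev/null -w '%{http_code}' \
    -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" \
    "${url}/api/v2.0/projects" \
    -d "{\"project_name\": \"${project}\", \"public\": true}")
  echo "${project}: $(describe_project_status "$code")"
}
```

For example, `create_project https://dockerhub.kubekey.local admin 'Harbor@123' ks` can be run repeatedly without hiding real failures behind the expected conflict.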

Verify:

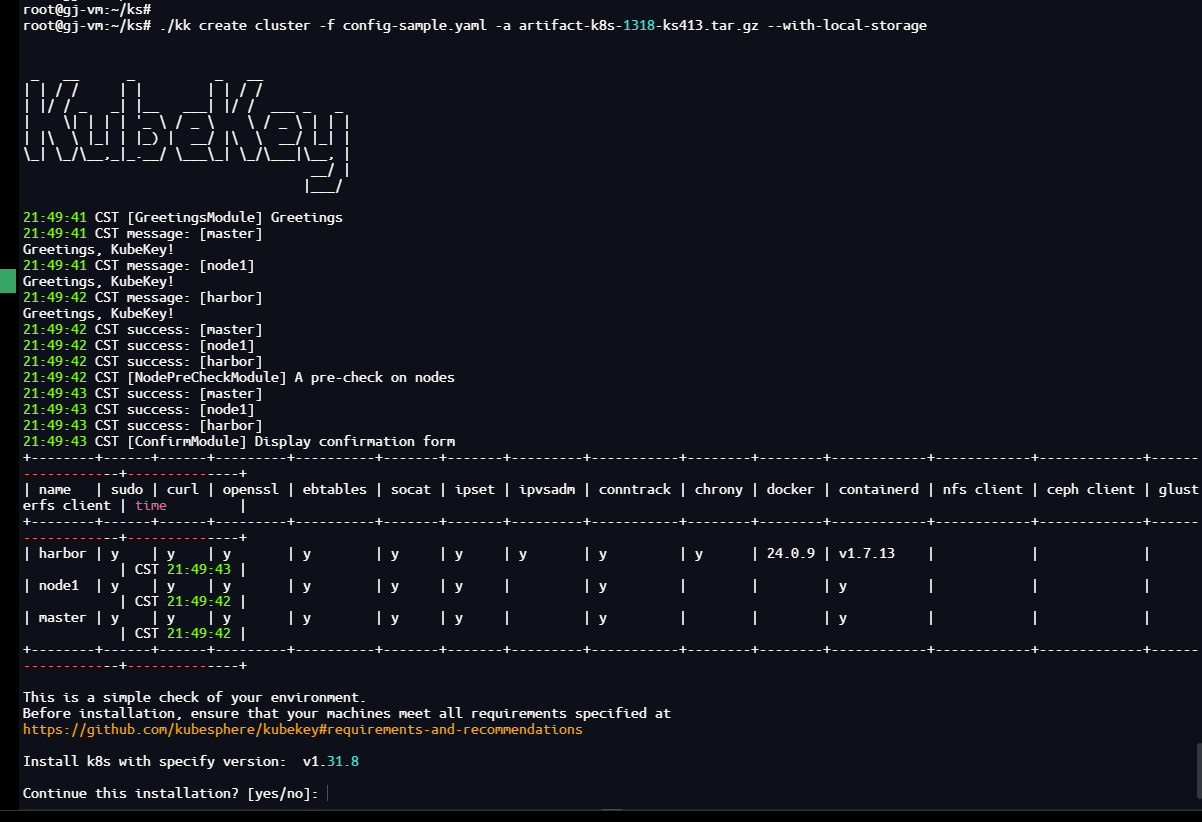

5. Install Kubernetes

Run the following command to create the Kubernetes cluster:

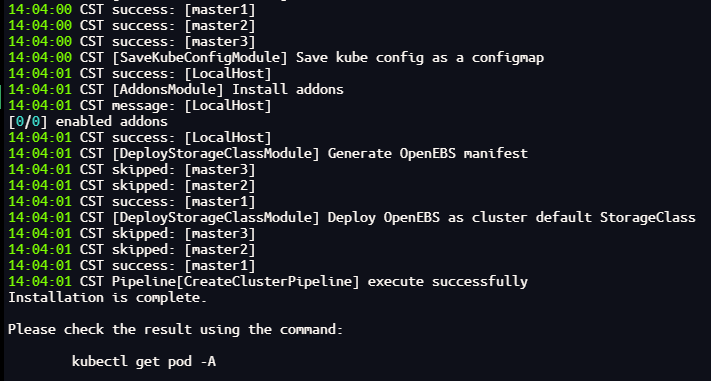

./kk create cluster -f config-sample.yaml -a artifact-k8s-13012-ks413-monit.tar.gz --with-local-storage

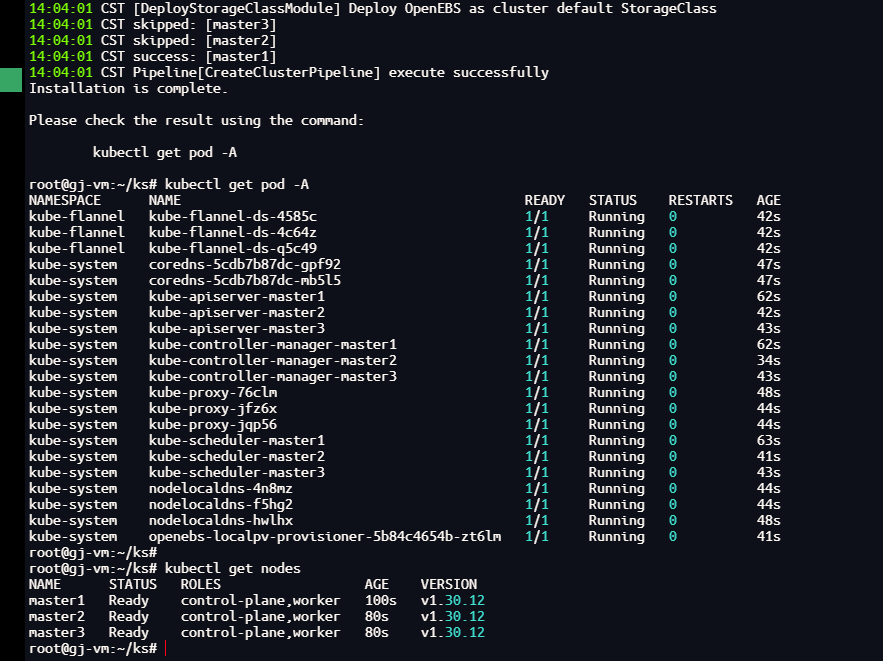

After roughly two minutes, a success message should appear.

Verify:

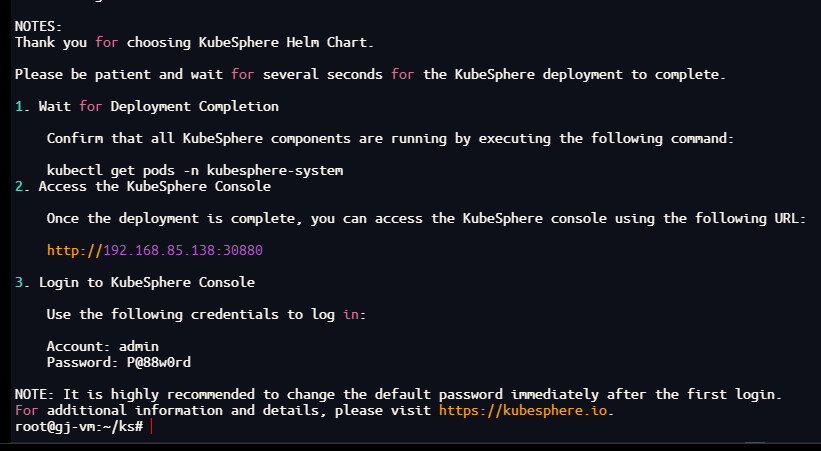
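The same verification can be scripted, which is convenient when the check is part of a larger installation script. The sketch below reads the output of `kubectl get nodes --no-headers` from standard input and lists any node that is not plainly `Ready`. `not_ready_nodes` is a hypothetical helper, and it deliberately treats combined states such as `Ready,SchedulingDisabled` as not ready.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column (second field) is not exactly "Ready".
# Combined states such as "Ready,SchedulingDisabled" are reported as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes`. Empty output means every node reports `Ready`.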

6. Install KubeSphere

helm upgrade --install -n kubesphere-system --create-namespace ks-core ks-core-1.1.5.tgz \
  --set global.imageRegistry=dockerhub.kubekey.local/ks \
  --set extension.imageRegistry=dockerhub.kubekey.local/ks \
  --set ksExtensionRepository.image.tag=v1.1.5 \
  --debug \
  --wait

After roughly 30 seconds, a success message should appear.

7. Verification
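On slower hardware the components can take longer to settle than the time quoted here, so a small polling helper may be more dependable than a fixed wait. The sketch below is a generic retry loop. `wait_until` is a hypothetical helper, and the deployment name in the usage note is assumed from the `ks-console` image listed in the manifest.

```shell
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as the command succeeds, 1 if it never does.
wait_until() {
  attempts="$1"; delay="$2"; shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_until 30 10 kubectl -n kubesphere-system rollout status deployment/ks-console --timeout=5s` polls for up to about five minutes before giving up.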

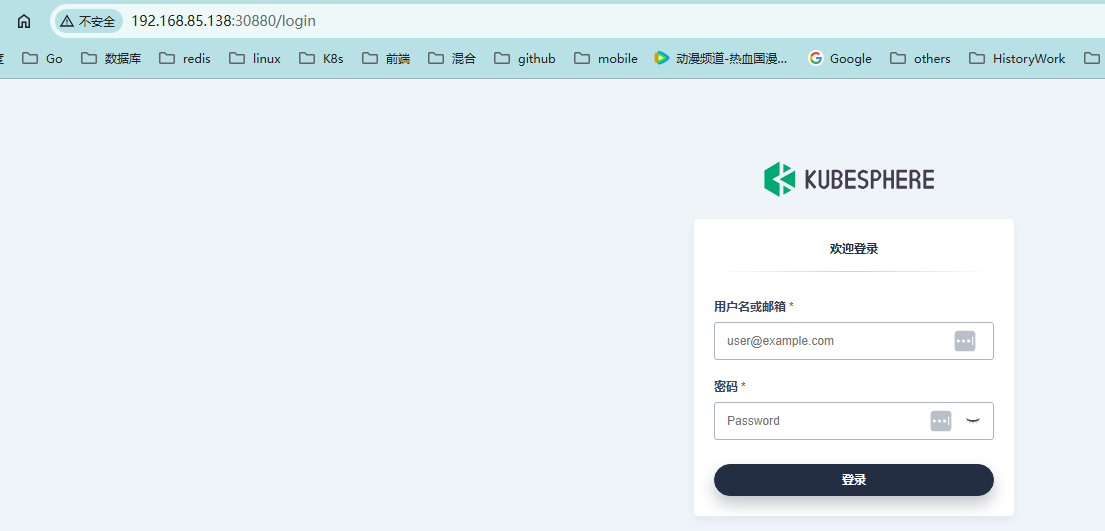

Login page

The first login prompts for a password change. Entering P@88w0rd again keeps the default, but changing it is recommended.

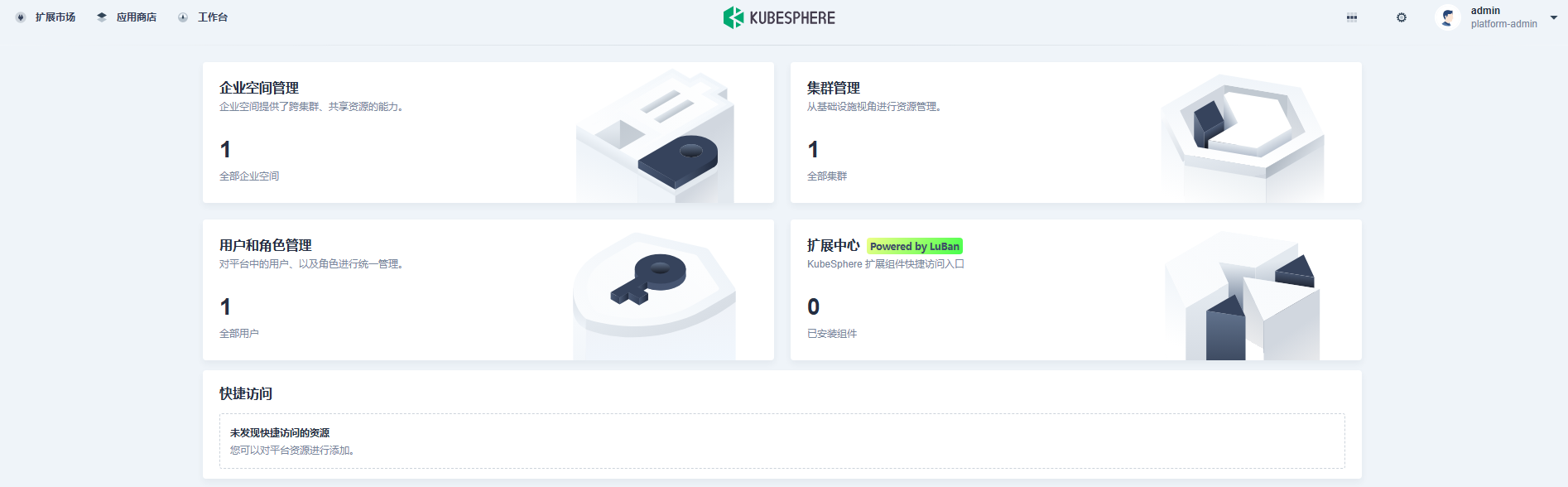

Home page

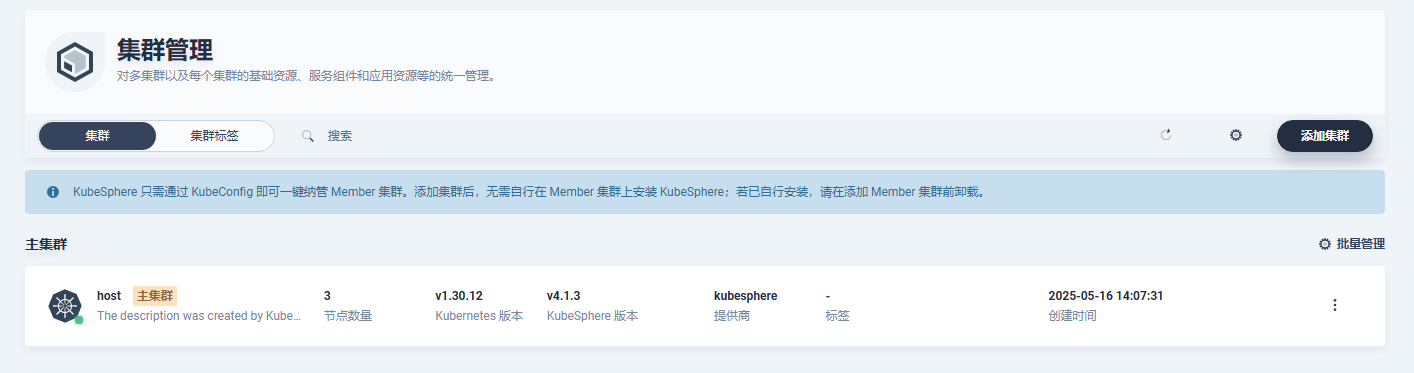

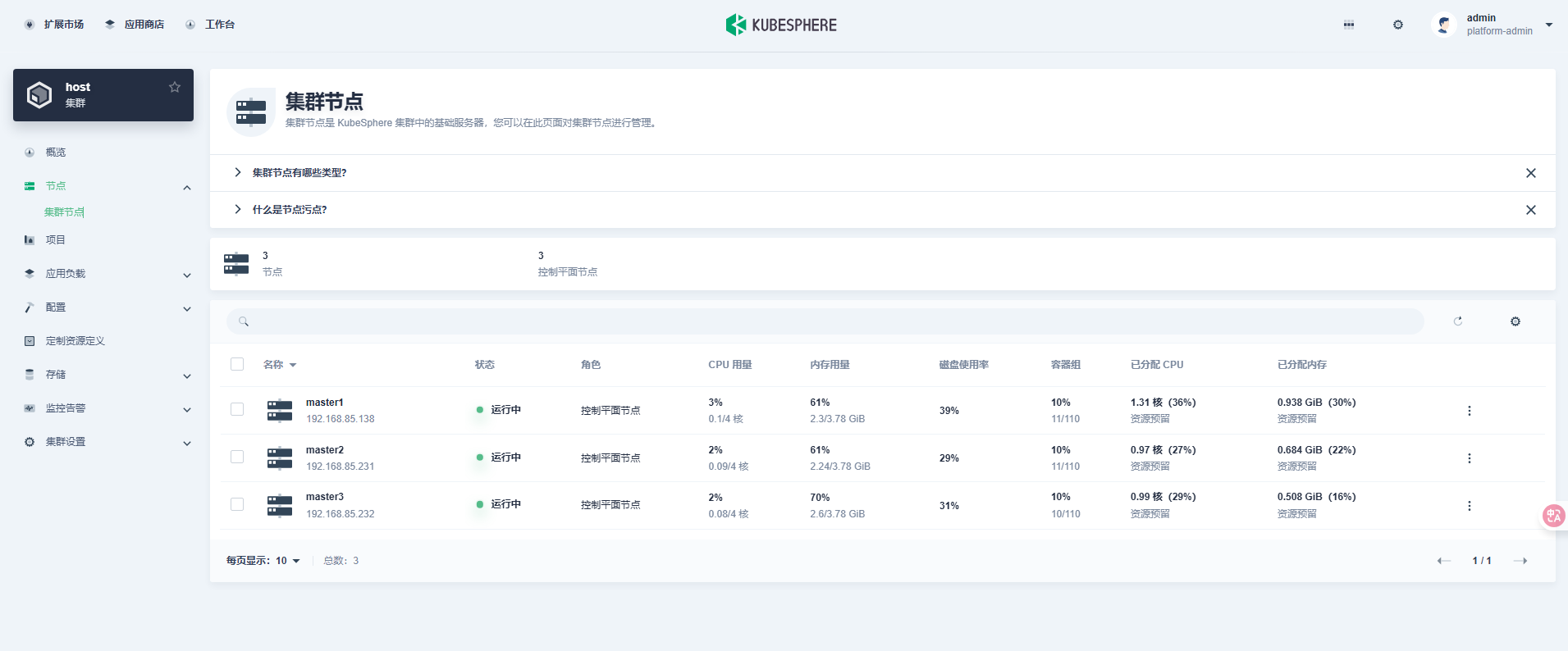

Cluster node version information

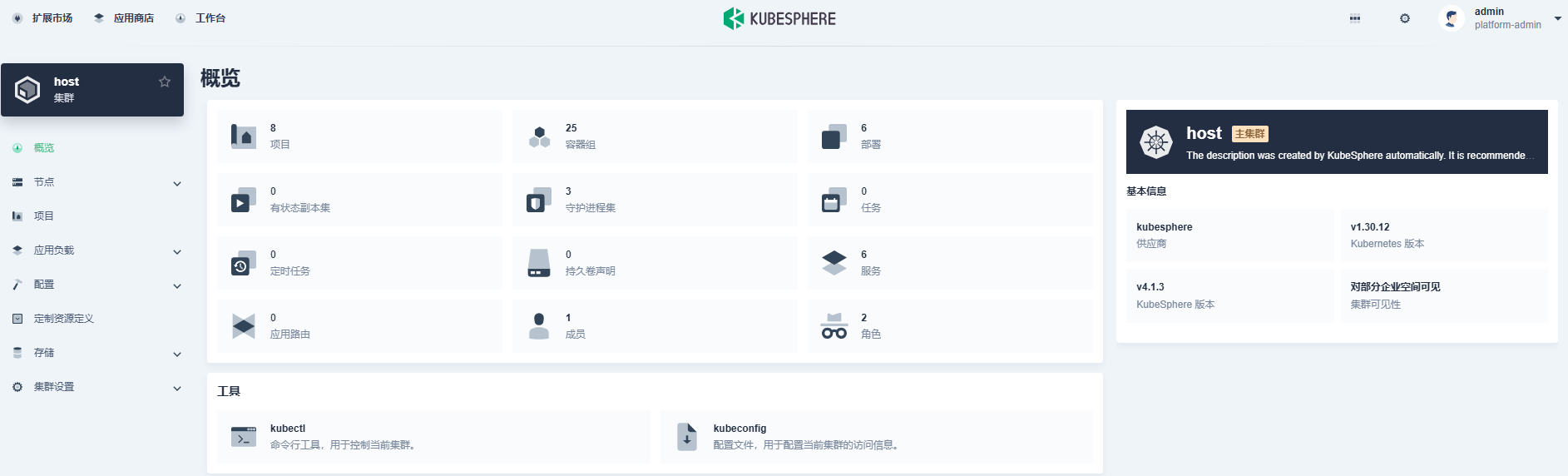

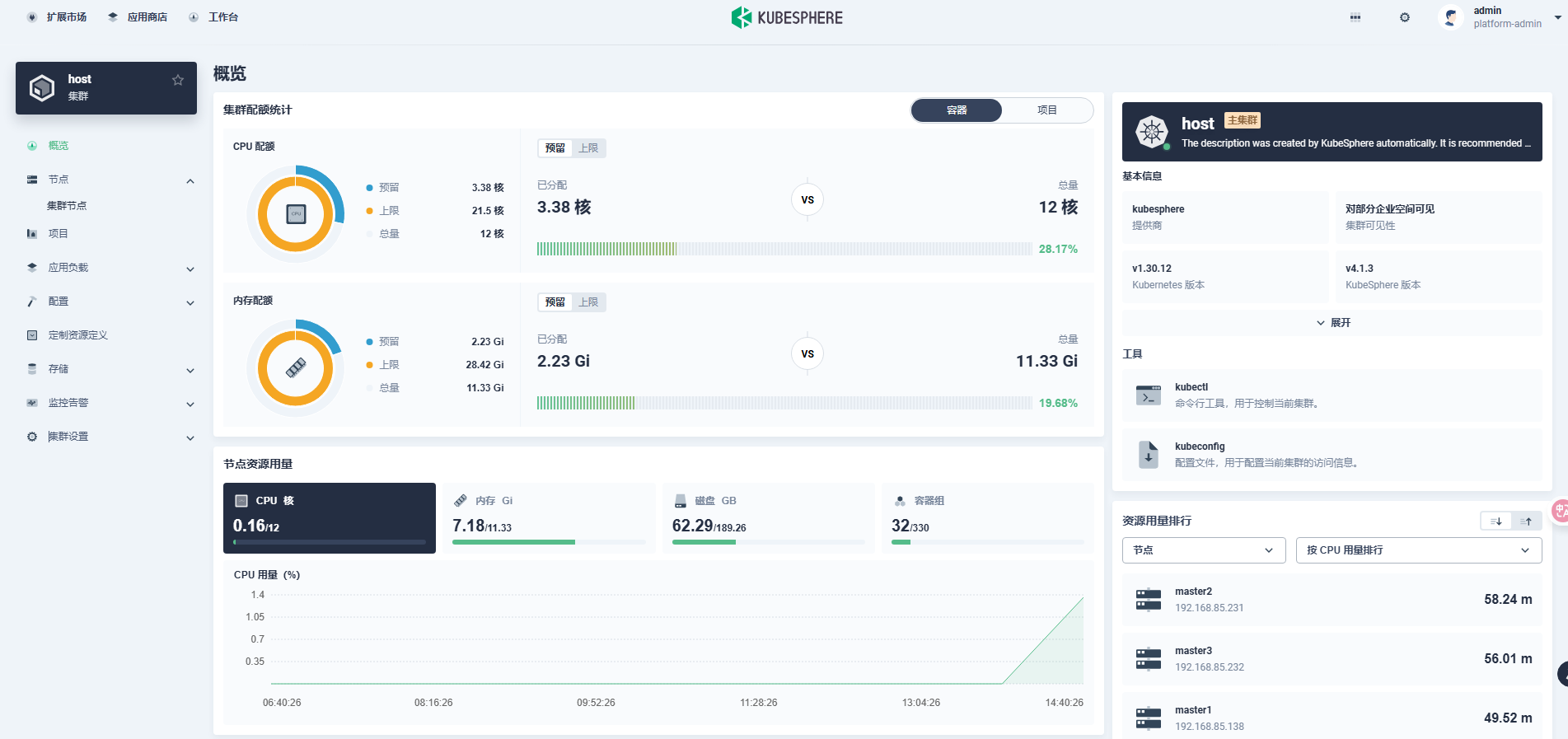

Overview

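8. Install the monitoring components

8.1 Install the platform service

Click Extension Marketplace (扩展市场) in the upper-left corner, then click WhizardTelemetry Platform Service (WhizardTelemetry 平台服务).

Then click Install.

Click Start Installation.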

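8.2 Install monitoring

In the Extension Marketplace, click Manage for WhizardTelemetry Monitoring (WhizardTelemetry 监控).

Then click Install; version 1.1.1 is recommended.

Install it to the host cluster.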

8.3 Verification

Once the services above have finished installing, log out and log back in.

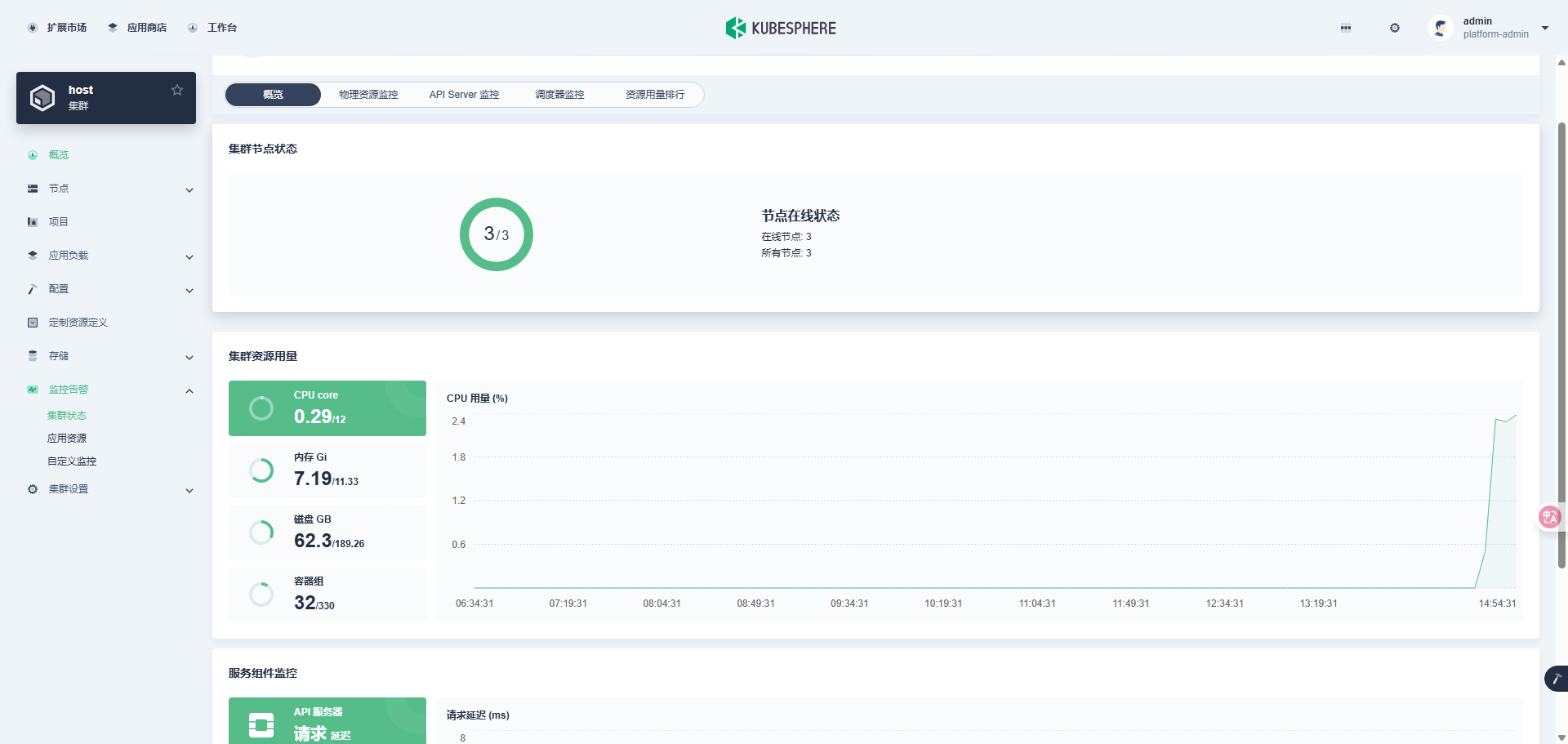

- Overview

- Cluster nodes

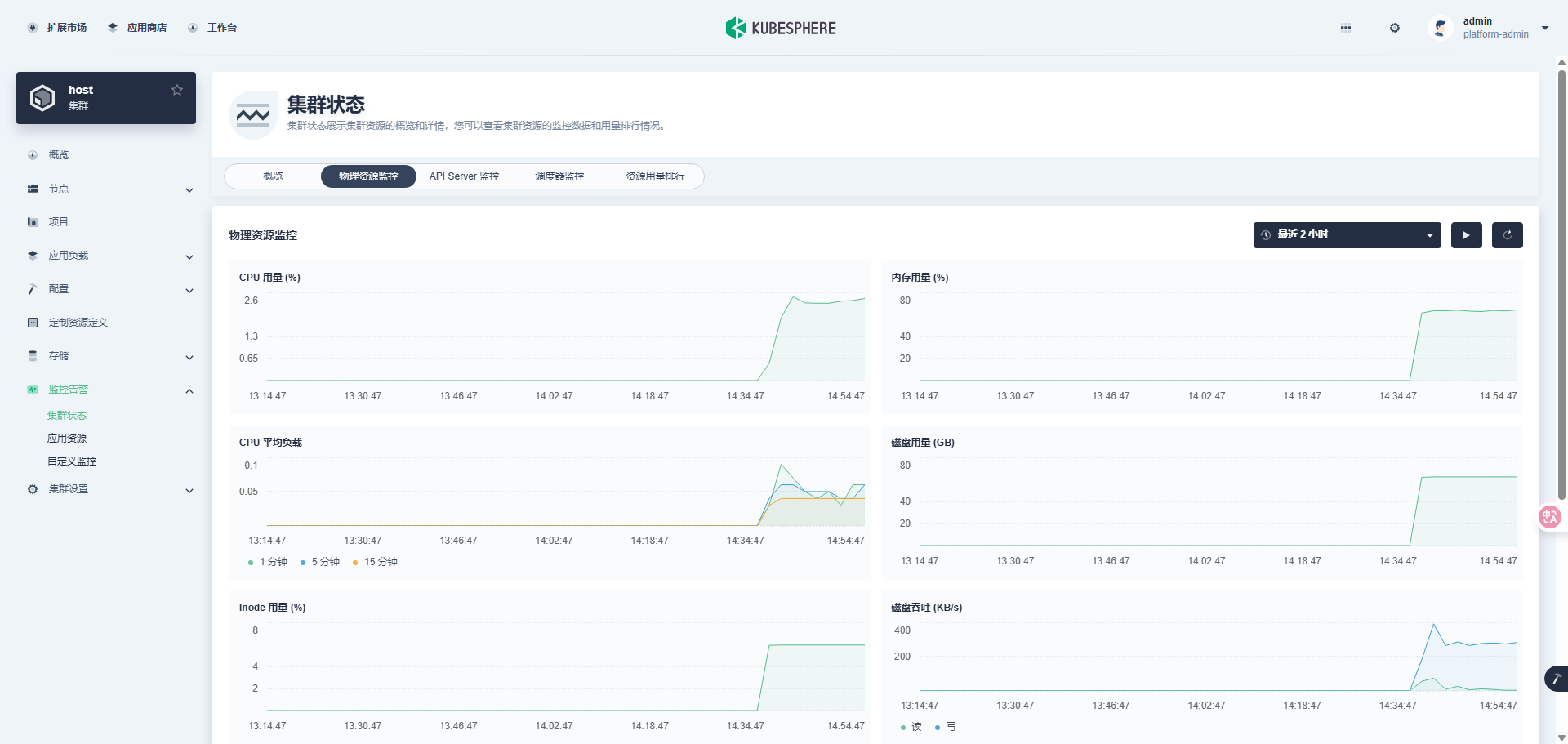

- Monitoring & Alerting: Cluster Status

The familiar interface from KubeSphere 3.x is back.
